Your data warehouse is only valuable if its contents are utilized. For your data to be usable, you must consider how they are presented to end users and how quickly they can answer queries. In this post, we will discuss how to construct data models that are easier to maintain, more helpful, and have better performance. In the realm of data and analytics, data modeling has gained increasing prominence.

Data analysts without a background in data engineering may now participate in building, defining, and constructing data models for use in business intelligence and analytics tasks, thanks to modern technologies and tools. The word "data modeling" can be interpreted in a variety of ways. Data modeling will be defined as the process of developing data tables for usage by users, BI tools, and applications.

With the advent of the contemporary data warehouse and ELT pipeline, many of the traditional norms and holy cows of data modeling are no longer applicable and, in some cases, even harmful.

In this blog, we will examine the current best practices for data modeling from the perspective of data analysts, software engineers, and analytics engineers who construct these models.

What is Data Modeling

Approximately 70% of software development initiatives fail due to early coding. Data modeling assists in characterizing the structure, connections, and limitations pertinent to accessing data, and encodes these rules into a standard that is re-usable.

Preparing a comprehensive data model requires familiarity with the process and its advantages, the many types of data models, best practices, and related software tools.

A data model is a tool for describing the fundamental business rules and data definitions associated with data. Data Modeling provides business and technical stakeholders with a clear, visual representation of complicated data ideas, to their benefit.

Why are Data Modeling Best Practices Important

The activities of every contemporary, data-driven organization create a large quantity of data. Due to differences in business activities, systems, and procedures, the data must be appropriately consolidated, cleaned to eliminate noise, and converted to allow meaningful analytics.

To achieve this goal, it is required to execute a Data Modeling exercise to arrange the data consistently and save it in a way that can be utilized for several reasons.

In addition, an efficient data model offers a stable basis for any Data Warehouse, allowing it to accommodate expanding data quantities and readily accommodate the addition or deletion of data entities.

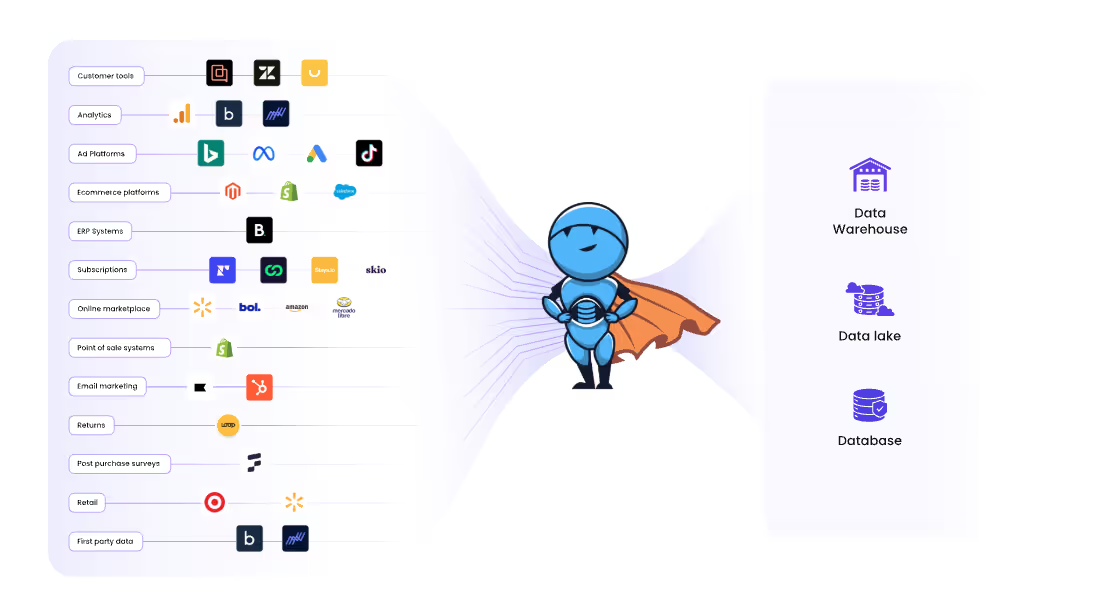

Watch below video to see how Saras Analytics helps Omnichannel brands turn fragmented data into powerful insights!

4 Best Practices for Data Modeling

There are four principles and best practices for data modeling design to help you enhance the productivity of your data warehouse:

Data Modeling Best Practices #1: Grain

Indicate the level of granularity at which the data will be kept. Usually, the least proposed grain would be the starting point for data modeling. Then, you may modify and combine the data to obtain summary insights.

Data Modeling Best Practices #2: Naming

Naming things remains a problem in data modeling. The ideal practice is to pick and adhere to a naming scheme.

Utilize schemas to identify name-space relations, such as data sources or business units. For instance, you might use the marketing schema to hold the tables most relevant to the marketing team, and the analytics schema to store advanced concepts such as long-term value.

Data Modeling Best Practices #3: Materialization

It is one of the most important tools for constructing an exceptional data model. If you build the relation as a table, you may precompute any required computations, resulting in faster query response times for your user base.

If you expose your relation as a view, your users' queries will return the most recent data sets. Nonetheless, reaction times will be sluggish. Depending on the data warehousing strategy and technologies you employ, you may have to make various trade-offs according to actualization.

Data Modeling Best Practices #4: Permissions and Governance

Data modelers should be aware of the varying rights and data governance requirements of the enterprise. Working collaboratively with your security team to verify that your data warehouse adheres to all applicable regulations would be beneficial.

For instance, firms that deal with medical data sets are subject to HIPAA data authorization and privacy rules. All customer-facing internet firms should be aware of the EU General Data Protection Regulation (EU GDPR), and SaaS enterprises are frequently constrained in their ability to exploit client data depending on the terms of their contracts.

3 Types of Data Models

The conceptual data model is the initial stage in comprehending the organization's operations. It aids in the documentation of how the firm functions, essential business principles, and how it all works to support business operations. It is a communication technique that Data Architects use to convey information to corporate audiences, particularly executives and important stakeholders.

After completing the conceptual data model, the next level of detail is the logical data model. The logical data model explains data structures, their connections, and the properties of each item in more detail.

To construct databases, the database development team requires physical data models. This level contains keys, constraints, main and foreign key associations, and specific data types for every attribute.

Techniques to Boost Your Data Modeling

These 10 approaches will assist you in enhancing your data modeling and its usefulness to your organization.

Comprehend the Business Requirements and Required Outcomes

The purpose of data modeling is to improve an organization's operation. As a data modeler responsible for gathering, organizing, and storing data for analysis, you can only achieve this objective if you understand the enterprise's requirements. Often, the greatest data modeling problem is accurately capturing business needs to determine which data to prioritize, gather, store, process, and make available to users.

Therefore, we cannot stress this enough: obtain a thorough grasp of the requirements by inquiring about the desired outcomes from the data. Then organize your data with these objectives in mind.

Visualize the to-be-Modeled Data

Observing many rows and columns of alphabetic entries is unlikely to result in insight. Most people are far more at ease with graphical representations of data that make it easy to spot abnormalities or with drag-and-drop interfaces that allow them to swiftly analyze and merge data tables.

These techniques to data visualization assist you clean your data so that it is comprehensive, consistent, and free of mistake and redundancy. In addition, they assist you identify distinct data record types that correspond to the same real-world object (such as "Customer ID" and "Client Ref. "), allowing you to modify them to utilize common fields and formats, so making it simpler to merge several data sources.

Commence with Simple Data Modeling and Expand Thereafter

Due to considerations including quantity, nature, structure, growth pace, and query language, data can quickly become complicated. Keeping data models modest and straightforward at the outset facilitates the correction of errors. When you are certain that your early models are reliable and informative, you may incorporate more datasets, reducing discrepancies as you go.

You should search for a tool that makes it simple to get started, but can accommodate extremely massive data models later, and that allows you to easily "mash-up" many data sources from various places.

Deconstruct Business Inquiries into Specifics, Dimensions, Filters, and Order

Understanding how these four parts constitute business questions can help you organize data in a manner that makes it simpler to deliver responses. For instance, say your business is a retail chain with several locations, and you need to determine which stores sold the most of a certain product over the last year.

In this instance, the facts would be the overall historical sales data (all sales of all products from all stores for each day over the past "N" years), the dimensions considered would be "product" and "store location", the filter would be "previous 12 months", and the order could be "top five stores in descending order of sales of the given product."

By structuring your data using separate tables for facts and dimensions, you simplify the analysis to identify the top sales performers for each sales period and to address other business intelligence queries.

Use Only the Necessary Data Rather Than All Available Data

Computers dealing with massive datasets can quickly encounter memory and input-output performance issues. In many instances, only a tiny subset of the data is required to address business queries.

Ideally, you should be able to identify which sections of datasets are to be used by simply checking boxes on-screen, allowing you to minimize data modeling waste and performance difficulties.

Perform Calculations Ahead of Time to Prevent User Disagreements

A primary objective of data modeling is to construct a single version of the truth against which users may pose business inquiries. People may have differing ideas on how an answer should be applied, but there should be no controversy regarding the facts or math utilized to arrive at the result.

For instance, a computation may be necessary to aggregate daily sales data into monthly statistics, which may then be compared to reveal the best and worst months. You may prevent difficulties by putting up this computation in advance as part of your data modeling and having it available in the dashboard for end users, as opposed to requiring everyone to use a calculator or spreadsheet tool (both of which are major causes of user mistake).

Before Continuing, Verify Each Stage of your Data Modeling

Before proceeding to the next stage, each activity should be reviewed, beginning with the data modeling priorities derived from the business requirements.

For instance, a dataset must have an attribute called the primary key so that each record may be uniquely recognized by the value of the main key in that record. Suppose you choose "ProductID" as the primary key for the aforementioned historical sales dataset.

This may be validated by comparing the total number of rows for "ProductID" in the dataset to the total number of unique (no duplicates) rows. If the two counts are same, "ProductID" may be used to uniquely identify each record; otherwise, another primary key must be identified. The same method may be applied to a joining of two datasets to ensure that their relationship is either one-to-one or one-to-many and to avoid many-to-many interactions that result in unnecessarily complicated or unmanageable data models.

Focus on Causation, Not Correlation

Data modeling contains use recommendations for the modeled data. While allowing end users to access business analytics on their own is a significant step forward, it is vital that they refrain from leaping to incorrect assumptions.

For instance, they may observe that the sales of two distinct items appear to grow and decline in tandem. Are the sales of one product causing the sales of another (a cause-and-effect relationship) or do they just rise and fall together (a simple correlation) due to an external factor such as the economy or the weather? Confusion between cause and correlation might lead to the targeting of incorrect or nonexistent opportunities, so squandering corporate resources.

Utilize Intelligent Tools to Do the Heavy Lifting

Complex data modeling may need coding or other procedures to handle data prior to analysis. However, if such "heavy lifting" can be performed by a software application, you are freed from the requirement to master many programming languages and may devote your time to other enterprise-beneficial activities.

All steps of data ETL can be facilitated or automated by a proper software application (extracting, transforming, and loading). Data can be accessible graphically without the need for scripting, various data sources can be combined using a simple drag-and-drop interface, and data modeling can even be performed automatically based on the query type.

Make Your Data Models Evolve

Business data models are never set in stone since data sources and business goals are ever-changing. You must therefore anticipate upgrading or altering them over time.

Use a data dictionary or "ready reference" with clear, up-to-date information on the purpose and structure of each piece of data to put your data models in a repository that facilitates their growth and change.

Future of Data Modeling Cloud

The field of data modeling is developing, and with the growth of cloud storage and computation, it will only continue to grow and improve. Due to the promising outcomes the two gives when combined, data modeling for data warehouse will soon be an enterprise-wide priority. The two procedures will continue to offer value to organizations and improve their long-term planning.

These strategies give clear lines of cross-functional communication and comprehension in a technical world that is always growing and where these ties will only become more useful.

As organizational infrastructures migrate to the cloud, data modeling software assists stakeholders in making educated decisions regarding what, when, and how data should be migrated.

Understanding how to implement technologies such as power BI models, ELT, data storage, data migration, and data streaming, among others, begins with a commitment to modeling the underlying data and the factors that contribute to its existence.

Related Read: 5 Useful Tips for Big Data Migration

Data modeling is, at its heart, a paradigm of data comprehension prior to analysis or action. With current data modeling strategies, such as codeless models, visual model construction, representative data shaping, graph techniques, programming, and more, its significance and need will increase exponentially as diverse domains increase their adoption rates.

Conclusion

These are the most significant high-level considerations while developing data models. The most essential piece of advice I can provide is to continually examine how to produce a better product for consumers; analyze the requirements and experiences of users and strive to create a data model that best serves these concerns.

While having a big toolbox of data modeling approaches and styles is advantageous, rigid adherence to any one set of principles or methodology is typically inferior to a flexible approach based on your organization's specific requirements.

.avif)

.png)

.png)

.png)

.webp)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

%20(1).avif)

.avif)

%20(1).avif)

%20(1).avif)

.avif)

.avif)